Talk:sRGB


Neutrality

The debate over sRGB, Adobe RGB and goodness knows what seems to be rather spread out and would benefit from being integrated and tidied up, with a stronger dose of neutrality. This article is still, I think, not neutral (the idea that you can assume sRGB is a little strong, even though I've written software in which that is the default). Notinasnaid 13:16, 31 Aug 2004 (UTC)

You can and should assume sRGB. It's nice to provide an out-of-the-way option for a linear color space that uses the sRGB primaries, and of course anything professional would have to support full ICC profiles. Normal image processing has been greatly simplified by sRGB. The only trouble is that lots of software simultaneously assumes that the data is sRGB (for loading, displaying, and saving) and linear (for painting, scaling, rotation, masking, alpha blending...). This is just buggy though; it wouldn't have been correct prior to the sRGB standard either. 65.34.186.143 17:30, 25 May 2005 (UTC)
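To make the point about software that treats the same data as both sRGB and linear more concrete, here is a minimal Python sketch (not taken from any particular program) that averages black and white two ways: directly on the sRGB-encoded values, and in linear light with re-encoding afterwards. The function names are made up for illustration; the constants 12.92, 1.055, 0.055, 2.4, 0.04045 and 0.0031308 are the ones usually quoted from IEC 61966-2-1.

```python
def srgb_to_linear(c):
    """Decode one sRGB-encoded channel value in [0, 1] to linear light."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4


def linear_to_srgb(c):
    """Encode one linear-light channel value in [0, 1] as sRGB."""
    if c <= 0.0031308:
        return 12.92 * c
    return 1.055 * c ** (1 / 2.4) - 0.055


def mix_encoded(a, b):
    """50/50 mix done directly on the encoded values (the shortcut)."""
    return (a + b) / 2


def mix_linear(a, b):
    """50/50 mix done in linear light, then re-encoded as sRGB."""
    return linear_to_srgb((srgb_to_linear(a) + srgb_to_linear(b)) / 2)


black, white = 0.0, 1.0
naive = mix_encoded(black, white)
correct = mix_linear(black, white)
print(round(naive * 255), round(correct * 255))
```

The shortcut lands on 8-bit code 128, while mixing in linear light gives a noticeably lighter grey (code 188). That gap is the kind of error that appears in scaling, blurring and alpha blending when encoded values are processed as if they were linear.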

- A statement like "You can and should assume sRGB" is obviously not neutral. It is telling people what to do, which is not the business of Wikipedia. The article shouldn't say things like that. At the moment, it still does. —Pawnbroker (talk) 03:41, 11 September 2008 (UTC)

- ??? I don't see anything like that in the article. Dicklyon (talk) 04:40, 11 September 2008 (UTC)

- How about this quote from the article: "sRGB should be used for editing and saving all images intended for publication to the WWW"? To me that seems about the same as saying "You can and should assume sRGB". Also there are various unsourced statements in the article about sRGB being used by virtually everything and everyone, and a vague sentence about the standard being "endorsed" by various organizations. The concept of endorsement is not at all clear. For example, does endorsement just mean that an organization has permitted sRGB to be one of the various color spaces that is allowed to be indicated as being used in some application, or does it mean that the organization has declared sRGB to be the most wonderful color space ever and said that everyone should use it exclusively? --Pawnbroker (talk) 17:05, 11 September 2008 (UTC)

- You can and should assume sRGB. That is entirely correct, buddy, as the vast majority of devices on the market use sRGB as their default or only color space. sRGB should be used for editing and saving all images intended for publication to the WWW. That is also correct, in the same sense that you should use English to communicate with as many people as possible. Femmina (talk) 03:41, 6 October 2008 (UTC)

- Note: Most people do not speak English [citation needed] ;-) Moreover, at least 80% of people I usually talk with would not understand me if I spoke English...

- Therefore I should consider speaking the language of those who I want to understand me, not English... Eltwarg (talk) 21:28, 30 November 2011 (UTC)

- The article "A Standard Default Color Space for the Internet - sRGB" is normative in the HTML4 specification, thus, all standard conforming web browsers should assume images are sRGB if they do not contain a color profile. CSS can also contain the "rendering-intent" for conversion from image color space to display color space, which by default is "perceptual". This does not mean we should use sRGB on the web, it only means that if we use another profile, it must be embedded in the images. --FRBoy (talk) 05:38, 1 December 2011 (UTC)

Limitations of sRGB

The article says that sRGB is limited, but it doesn't go into detail as to why. I am used to RGB being expressed as a cube with maximum brightness of each element converging at one corner of the cube, producing white. Since white is usually expressed as the combination of all colors, it isn't hard to come to the conclusion that RGB should be able to produce all colors, limited of course by the color resolution, i.e. 4 bits per primary, 8 bits per primary, etc.

- The range of colors you can get from a given color space is called its gamut. Every RGB color space can be thought of as a cube, but the cubes are different sizes, even if you want to think of them as sharing the white corner (and white is not universal; see white point). So some RGB spaces cannot express colors that are in other cubes. Hope that makes sense. Now, as the article says, sRGB is frequently criticised by publishing professionals because of its narrow color gamut, which means that some colors that are visible, even some colors that can be reproduced in CMYK, cannot be represented by sRGB. Notinasnaid 14:47, 29 October 2005 (UTC)

The problem I seem to be having is that the article doesn't make it clear that the issue isn't the divvying up of the space encompassed by sRGB, but the position and/or number of the three bounding points. Hackwrench 17:33, 30 October 2005 (UTC)

- It probably could be clearer, but first make sure you read the article on gamut which is crucial to the discussion, and rather too large a subject to repeat in this article. Now, with that knowledge, how can we improve the article beyond just saying its narrow color gamut? Notinasnaid 08:24, 31 October 2005 (UTC)

- This text about a limitation is rather odd: designed to match the behavior of the PC equipment commonly in use when the standard was created in 1996. The physics of a CRT have not changed in the interim, and the phosphor chromaticities are those of a standard broadcast monitor, so this odd notion of "limited PC equipment", which seems to crop up all over the Web, often in PC vs. Mac discussions, is just incorrect.

- On the other hand, the limited color gamut should be better explained, both as a weakness (significant numbers of colors cannot be represented) and a strength (the narrower gamut assures less banding in commonly occurring colors, when only 8 bits per component are used). --Nantonos 17:16, 20 February 2007 (UTC)

The gamut limitation essentially means that for some images you have a choice of either a) clipping, removing saturation information for very saturated colours or b) making the entire image greyer. This is not a big issue in practice however, because on the one hand our visual system has the tendency to ‘correct’ itself and if the entire image is greyer you stop noticing and on the other hand most real world images fit well enough in the gamut. Photos look a lot better on my LCD screen than in high-quality print. — Preceding unsigned comment added by 82.139.81.0 (talk) 21:50, 23 March 2012 (UTC)
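The two choices described above (clip, or make the whole image greyer) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: pixels are linear-light triples in sRGB primaries, the two sample pixels are invented, "greyer" is implemented as mixing every pixel toward a grey of the same luminance by one common factor, and the luminance weights are the usual sRGB ones (0.2126, 0.7152, 0.0722).

```python
def luminance(rgb):
    """Relative luminance of a linear-light triple in sRGB primaries."""
    r, g, b = rgb
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def clip(rgb):
    """Option (a): clamp each channel to [0, 1], discarding saturation."""
    return tuple(min(1.0, max(0.0, c)) for c in rgb)


def grey_mix_needed(rgb):
    """Smallest t so that (1 - t) * rgb + t * grey lies in [0, 1].

    Assumes the pixel's luminance itself is within [0, 1].
    """
    y = luminance(rgb)
    t = 0.0
    for c in rgb:
        if c > 1.0:
            t = max(t, (c - 1.0) / (c - y))
        elif c < 0.0:
            t = max(t, c / (c - y))
    return t


def desaturate(rgb, t):
    """Mix a pixel toward the grey of equal luminance by factor t."""
    y = luminance(rgb)
    return tuple((1 - t) * c + t * y for c in rgb)


# Hypothetical two-pixel "image": one out-of-gamut pixel, one ordinary pixel.
image = [(1.2, 0.6, -0.02), (0.5, 0.4, 0.3)]

clipped = [clip(p) for p in image]                # option (a)
t = max(grey_mix_needed(p) for p in image)        # one factor for the whole image
greyer = [desaturate(p, t) for p in image]        # option (b)

print(clipped)
print(round(t, 3), greyer)
```

Clipping leaves the in-gamut pixel untouched but changes the hue and luminance of the out-of-gamut one; the common grey mix keeps every pixel's luminance and the relations between pixels, at the cost of desaturating pixels that were already fine.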

Years and years ago, I remember much of the wrangling that went into the formation of sRGB, and among the chief complaints from the high-end color scientists at the time was that sRGB was zero-relative. Even though there are conversion layers that are placed above sRGB, it is still the case that having sRGB able to "float" somewhat would have allowed a great deal of problem-solving flexibility with only a minor loss in data efficiency. When I hear most of the complaints about sRGB, I hear the many conversations I've had in the past with such scientists and engineers, who predicted many of them. Tgm1024 (talk) 15:50, 25 May 2016 (UTC)

Camera Calibration

This article needs a rational discussion of the problem with RELYING on sRGB as if it proves anything about the content of an image -- it doesn't. It's merely a labelling convention -- and what's more, it doesn't even speak to whether or not the LCD on the camera produced a like response. You can manufacture a camera that labels your image 'sRGB' and yet the LCD is displaying it totally differently, and the label isn't even a lie. A consumer camera labelling an image 'sRGB' is no guarantee that you are actually looking at the images in this colourspace on the camera itself! This causes much, much MUCH confusion when people do not get the results they expect. I don't want it to become a polemic against the standard or anything, but putting an sRGB tag on an image file is not a requirement to change anything whatsoever about how the LCD reads. Calibrating an image from an uncalibrated camera is useless, and most consumer cameras are just that, labelled sRGB or not. This is kind of viewpoint stuff, and I don't really want to inject it into the article, but there is a logical hole into which all this falls, and that hole is: Labelling something in a certain manner does not necessarily make it so. This is the problem with sRGB, but people don't understand this because they think of sRGB as changing the CONTENTS of the file, not just affixing the label. So they think that their cameras are calibrated to show them this colourspace, but they aren't nor are they required to. This basic hole in the system by which we are "standardising" our photographs should be pointed out. I have made an edit to do that in as simple and neutral manner as I could think of.
The language I came up with is this (some of this wording is not mine but what I inherited from the paragraph I modified): "Due to the use of sRGB on the Internet, many low- to medium-end consumer digital cameras and scanners use sRGB as the default (or only available) working color space and, used in conjunction with an inkjet printer, produces what is often regarded as satisfactory for home use, most of the time. [Most of the time is a very important clause.] However, consumer level camera LCDs are typically uncalibrated, meaning that even though your image is being labelled as sRGB, one can't conclude that this is exactly what we are seeing on the built-in LCD. A manufacturer might simply affix the label sRGB to whatever their camera produces merely by convention because this is what all cameras are supposed to do. In this case, the sRGB label would be meaningless and would not be helpful in achieving colour parity with any other device." --67.70.37.75 01:39, 2 March 2007 (UTC)

- I'm not so sure the article needs to go into this, but if so it should say only things that are verifiable, not just your reaction to the fact that not all sRGB devices are well calibrated. The fact that an image is in sRGB space is a spec for how the colors should be interpreted, and no more than that; certainly it's no guarantee of color accuracy, color correction, color balance, or color preference. Why do you think people think what you're saying? Have a source? Dicklyon 02:04, 2 March 2007 (UTC)

- I'm afraid your understanding of the issue (no offence) is a perfect example of what's wrong with the way people understand sRGB as it relates to cameras. It's not that some devices' LCDs are not "well calibrated". It's that most all digital camera LCDs are UNcalibrated. It's not usual practice. They don't even try. And this is also true of most high end professional cameras, such as Canon's 30D and Nikon's D70. I only say 'most' because I can't make blanket statements. But I have never actually encountered a digital camera that even claims a calibrated LCD, and I've used and evaluated a lot of them. As far as I know, a "color accurate" camera LCD doesn't exist (except maybe by accident, and not because of any engineering effort whatsoever). But of course Canon and Nikon don't advertise in their materials that they make no attempt to calibrate what you're shown on the camera itself (and that as a result, the sRGB tag is really not of any use to people who take pictures with their cameras). However, this is common knowledge among photography professionals. Obviously 'common knowledge' is not a citable source, but you wouldn't want a wiki article to contradict it without a citable source, right?! I have looked around for something with enough authority, but I couldn't find it. This isn't really discussed in official channels, probably because it's embarrassing to the whole industry, really. But I did find some links to photographers and photography buffs discussing the situation. The scuttlebutt is universal and accords with my experience: no calibration on any camera LCDs, to sRGB or to anything else. Even on $2000 cameras...

- From different users with different cameras @ [1]: "I know my D1H calibration is not WSYWIG. I get what I see in my PC ... not on the camera's screen"; (re the EOS 10D) "No, not calibrated and not even particularly accurate"; "No [they] are not, the EOS 10D lcd doesn't display colors correctly thats why many use the histogram".

- From [2]: "The only way to be sure is to actually take the shots, process them all consistently, and look at the luminance. Best case would be to shoot a standard target, so you could look at the same color or shade each time. Otherwise, you are guessing about part of your camera that is not calibrated, or intended to use to judge precise exposure."

- From [3]: "users should be aware that the view on the LCD is not calibrated to match the recorded file".

- On the Minolta Dimage 5 from [4]: "The LCD monitor is also inaccurate in its ability to render colors."

- From [5]: "I realize the LCD is not accurate for exposure, so I move to the histogram." (And of course, the histogram is pretty useless for judging things like gamma, so it's no substitute for a viewfinder-accurate colourspace.)

- You hear that last kind of offhand remark all the time on photography forums. Camera LCDs aren't calibrated. They all know it. I rest my case with the realisation that my evidence is anecdotal, but to conclude that argument with a "pretend absence of evidence is evidence of absence" argument: you will not find through Google or through any other search engine any product brochure or advertisement that claims any digital camera has a calibrated LCD. There are no LCD accuracy braggarts -- and there should be, if camera LCD calibration existed. Anyway, I'm happy with the modest statement as it now stands in the article just making the distinction between just having an sRGB colourspace tag affixed to an image, and actually knowing that the image has been composed in the sRGB colourspace, because they are two different things.--65.93.92.146 11:00, 4 March 2007 (UTC)

- I just noticed the new language. It's better than mine, more succinct and more precise, too. Good job.--65.93.92.146 11:17, 4 March 2007 (UTC)

- Thanks. The point is that not being able to trust the LCD is not an sRGB issue, and doesn't make the sRGB irrelevant, so I pruned back your text to what's true. Dicklyon 15:53, 4 March 2007 (UTC)

- Whether or not the camera manufacturers do a good job of producing sRGB is not relevant to the discussion of sRGB itself. A camera manufacturer could also do a bad job of producing Adobe Wide Gamut yet label their output as that, and that is not Adobe Wide Gamut's fault. And the fact is that whether they do it on purpose or by accident, the output of the camera *approaches* sRGB, since they want it to look "correct" when displayed on users' computer screens and printed on users' printers. sRGB defines this target much better than a subjective analysis of a screen display and thus serves a very important purpose, whether or not it is handled accurately. Spitzak 16:59, 18 April 2007 (UTC)

easy way to do wide gamut stuff

Probably the sane thing to do these days, for something like a decent paint program, is to use the sRGB primaries with linear floating-point channels. Then you can use out-of-bounds values as needed to handle colors that sRGB won't normally do. This scheme avoids many of the problems that you'd get from using different primaries. AlbertCahalan 05:05, 5 January 2006 (UTC)

- That seems roughly like a description of scRGB. Cat5nap (talk) 21:18, 23 October 2021 (UTC)
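A small Python sketch of why unclamped linear floats help, under invented example values: the triple below stands for a saturated green that lies outside the sRGB gamut (negative red and blue), and the helper names are hypothetical. Mixing it with white while keeping the out-of-bounds values gives a different, and more faithful, result than clamping first.

```python
def mix(a, b, t):
    """Linear interpolation between two linear-light triples."""
    return tuple((1 - t) * x + t * y for x, y in zip(a, b))


def clamp(rgb):
    """Force every channel into [0, 1], as an integer 8-bit pipeline would."""
    return tuple(min(1.0, max(0.0, c)) for c in rgb)


# Hypothetical out-of-gamut colour expressed in linear sRGB primaries.
saturated_green = (-0.3, 0.8, -0.03)
white = (1.0, 1.0, 1.0)

kept = mix(saturated_green, white, 0.5)          # out-of-bounds values preserved
lost = mix(clamp(saturated_green), white, 0.5)   # clamped before mixing

print(kept)
print(lost)
```

The unclamped mix ends up inside the gamut with its red and blue correctly lower than in the clamped version, so the information carried by the negative components was not wasted. Whether a given program stores such values this way is a design choice; the sketch does not describe any specific application.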

gamma = 2.4?

I believe the value of the symbol γ is supposed to be 2.4. However, it is not clear at all from the article: at first I thought the value was 2.2. Could someone who knows more than me explain this in the article? --Bernard 21:21, 13 October 2006 (UTC)

- Keep an eye on the article, because people keep screwing this up. The sRGB color space has a "gamma" that consists of two parts. The part near black is linear. (gamma is 1.0) The rest has a 2.4 gamma. The total effect, considering both the 1.0 and 2.4 parts, is similar to a 2.2 gamma. The value 2.2 should not appear as the gamma in any sRGB-related equation. 24.110.145.57 01:38, 14 February 2007 (UTC)

- That's not quite true. The gamma changes continuously from 1.0 on the linear segment to something less than 2.4 at the top end; the overall effective gamma is near 2.2. See gamma correction. Dicklyon 03:07, 14 February 2007 (UTC)

- To avoid confusion, I suggest replacing the expression 1.0/2.4 with 1.0/γ and declaring γ = 2.4 right after. At least that would make the math unambiguous. An explanation is also desired of why the 'effective gamma' (2.2) is not the same as the letter γ (2.4). Actually, there is nothing unusual: if you combine the gamma = 2.4 law with some other transformations, you get a curve that is best fit by some other effective gamma (2.2). Also, note that the effective gamma is more important (it does not figure in the definition, but it was the basis of the design; see the sRGB specification mentioned in the article). --The preceding unsigned comment was added by 91.76.89.204 (talk) 21:44, 14 March 2007 (UTC).

- I don't understand your suggestion, nor what confusion you seek to avoid. 2.4 is not the gamma, it is an unnamed and constant-valued parameter of the formulation of the curve. Dicklyon 07:36, 15 March 2007 (UTC)
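For readers trying to follow the 1.0 / 2.4 / 2.2 numbers in this thread, here is a rough Python sketch of the two-part decoding curve and of its local exponent (the slope on log-log axes). The function names are invented, and the constants 0.04045, 12.92, 0.055, 1.055 and 2.4 are assumed to be the ones in IEC 61966-2-1. For the power segment the local exponent works out analytically to 2.4·v / (v + 0.055).

```python
def srgb_to_linear(v):
    """Decode an sRGB-encoded value v in [0, 1] to linear light."""
    if v <= 0.04045:
        return v / 12.92              # linear segment near black
    return ((v + 0.055) / 1.055) ** 2.4


def local_gamma(v):
    """Local exponent d ln(linear) / d ln(v) of the decoding curve at v > 0."""
    if v <= 0.04045:
        return 1.0                    # a straight line through the origin
    return 2.4 * v / (v + 0.055)


for v in (0.02, 0.25, 0.5, 0.75, 1.0):
    print(v, round(srgb_to_linear(v), 4), round(local_gamma(v), 3))
```

The local exponent is 1.0 on the linear segment and climbs to 2.4/1.055 (about 2.27) at full scale, never reaching 2.4, which is consistent with the remark that 2.4 is a parameter of the formula rather than the gamma of the curve.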

Gamma & TRC

The sRGB standard specifies an ENCODING gamma (Tone Response Curve, or transfer function) that effectively offsets the 2.4 power curve exponent, so that it very closely matches a "pure" gamma curve of 2.2. The reason for the piecewise form with the tiny linear segment was to help with math near black (with a pure 2.2 curve, the slope at 0 is infinite).

BUT WAIT THERE'S MORE

That piecewise form was for image processing purposes. Displays don't care. CRT displays in particular displayed at whatever gamma they were designed for (somewhere from 1.4 to 2.5ish, depending on system, brand, etc.; Apple, for instance, was based around a 1.8 gamma until circa 2008). Most modern displays are about a 2.4 gamma, resulting in a desirable gamma gain for most viewing environments.

AND: most ICC profiles for sRGB use the simple 2.2 gamma. Even Adobe ships simple 2.2 gamma profiles with After Effects.

And to be clear: that linearized section is in the black area, with code values so small they are indiscernible. And any visible difference mid-curve is barely noticeable side by side.

The upshot: IF you are going in and out of sRGB space (to linear for instance) it MAY make sense to use the piecewise version. However, using the simple gamma version simplifies code and improves execution speed, which is important if you are doing real-time video processing for instance. --Myndex (talk) 02:37, 21 October 2020 (UTC)
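As a rough check on how far apart the two versions are, the following Python sketch decodes every 8-bit code with the piecewise curve and with a pure 2.2 power law and reports the largest difference in linear light. It is an illustration only: the function names are invented, the piecewise constants are assumed to be those of IEC 61966-2-1, and it says nothing about execution speed.

```python
def decode_piecewise(v):
    """Piecewise sRGB decoding (linear segment plus 2.4 power segment)."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def decode_pure(v, gamma=2.2):
    """Simple power-law decoding with a single exponent."""
    return v ** gamma


codes = [n / 255 for n in range(256)]
diffs = [abs(decode_piecewise(v) - decode_pure(v)) for v in codes]
worst_code = max(range(256), key=lambda n: diffs[n])

print("largest difference:", diffs[worst_code], "at code", worst_code)
```

The largest absolute difference stays below 0.01 on a 0 to 1 linear scale and occurs in the upper midtones rather than near black. Whether that is acceptable depends on the application; round-tripping through one version and back through the other would accumulate the mismatch.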

or needs to be scaled ....

I don't think that scaling the linear values is part of the specification. If the linear values lie outside [0,1], then the color is simply out of the sRGB gamut. Shoe-horning them back into the gamut may be a solution to the problem for a particular application, but it is not part of the specification and it is not an accurate representation of the color specified by the XYZ values. PAR 16:38, 23 May 2007 (UTC)

- OK, I think I fixed it to be more like what I intended to mean. Dicklyon 21:59, 23 May 2007 (UTC)

Formulæ

I can't see how the matrix formulæ can be correct with X, Y, Z. Suppose I choose X = 70.05, Y = 68.79, Z = 7.78 (experimental values to hand for a yellow sample), then there is no way the matrix will generate values in the range [0, 1]. Sorry if there is something obvious I'm overlooking, but surely the RHS needs to be x, y, and z??? --DIV (128.250.204.118 06:49, 30 July 2007 (UTC)) Amended symbols. 128.250.204.118 01:14, 31 July 2007 (UTC)

- The RGB and XYZ ranges should be about the same (both in 0 to 1, or both in 0 to 100, or both in 0 to 255 or whatever). Of course, the RGB values won't always stay in range. The triples that are out of range are out of gamut. Dicklyon 14:39, 30 July 2007 (UTC)

- I see what you're saying, but it is not what the article currently says. If you have a look, you will see that the first formula uses X, Y and Z, and immediately below is a 'conversion' to (back-)compute these parameters if you start off with x, y and Y. To me there is only one way of reading this, which is that the first formula uses the tristimulus values (X, Y and Z), and not chromaticity co-ordinates (x, y and z), where the present naming follows the CIE. And, indeed, this is what the article currently says. Yet the article further states just below this that:

"The intermediate parameters R_linear, G_linear and B_linear for in-gamut colors are in the range [0,1]",

- and at the end of that sub-section that

the "gamma corrected values" obtained by using the formulæ as stated "are in the range 0 to 1".

- Chromaticity co-ordinates obviously have a defined range due to the identity x + y + z = 1. There is no equivalent for the tristimulus values.

- A further disquiet I have concerns the implication that the domains scale 'nicely', considering that x = y = z = 1 is not allowed, whereas r = g = b = 1 is allowed.***

- Dicklyon, your answer sounds like it supports my initial finding. At present, then, the article is incorrect and misleading. If what you say is correct, then the article text and formulæ should be amended to suit. If the matrix formula is supposed to be generic, then you'd be better off writing the two vectors as simply C and S, and then say that when C is such-and-such, S is this, and when C is such-and-such other, then S is that, ....(et cetera)

- —DIV (128.250.204.118 01:36, 31 July 2007 (UTC))

- ***At least, the article implies it's allowed. In contrast, CIE RGB doesn't allow it; explained nicely at the CIE 1931 article. —DIV (128.250.204.118 01:52, 31 July 2007 (UTC))

I added a note to indicate the XYZ scale that is compatible with the rest. The XYZ value example for D65 white is already consistent with the rest. You are right that you can't have x=y=z=1 but can have r=g=b=1 (that's called white). Dicklyon 02:48, 31 July 2007 (UTC)
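The scale issue discussed in this section can be checked numerically. The Python sketch below assumes the four-decimal XYZ-to-linear-sRGB matrix usually quoted from IEC 61966-2-1 and a `white_y` parameter (a name invented here) giving the Y value of white on whatever scale the measurements use. It converts the D65 white quoted in the article and the yellow sample from the start of the thread; whether that sample was measured relative to D65 is not stated, so its result is only indicative.

```python
# XYZ -> linear sRGB, four-decimal values as commonly quoted from the standard.
XYZ_TO_LINEAR_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def xyz_to_linear_srgb(xyz, white_y=1.0):
    """Convert tristimulus values to linear sRGB, normalising so white has Y = 1."""
    x, y, z = (c / white_y for c in xyz)
    return tuple(m[0] * x + m[1] * y + m[2] * z for m in XYZ_TO_LINEAR_SRGB)


def in_gamut(rgb, eps=1e-3):
    """True if every linear component lies in [0, 1], within a small tolerance."""
    return all(-eps <= c <= 1 + eps for c in rgb)


d65_white = (0.9505, 1.0000, 1.0890)      # already on a 0..1 scale
yellow_sample = (70.05, 68.79, 7.78)      # on a 0..100 scale

white_rgb = xyz_to_linear_srgb(d65_white)
yellow_rgb = xyz_to_linear_srgb(yellow_sample, white_y=100.0)

print(white_rgb, in_gamut(white_rgb))
print(yellow_rgb, in_gamut(yellow_rgb))
```

With the scales matched, white maps to (1, 1, 1) to within rounding, while the yellow sample comes out with red above 1 and blue slightly below 0. On the assumptions above it is therefore outside the sRGB gamut, which is a property of the color, not a sign that the formula needs chromaticity co-ordinates.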

Why the move from "sRGB color space" to "sRGB"?

What's the benefit? Why was this done with no discussion? Why was it marked a "minor edit"? --jacobolus (t) 00:47, 28 July 2007 (UTC)

- Agree, this is inconsistent with the other articles. On the plus side, it avoids conflict over the use of "colour" versus "color" ;-)

- —DIV (128.250.204.118 05:47, 30 July 2007 (UTC))

Discrepancy between sources

I noticed a discrepancy between the W3C source and the IEC 61966 working draft. Using the notation of the article, the W3C source specifies the linear cutoff as Clinear ≤ 0.00304, while the IEC 61966 source specifies the linear cutoff as Clinear ≤ 0.0031308, as used in the article. Does anyone know why the discrepancy exists or why the article author chose the cutoff from the IEC working draft? Thanks. WakingLili (talk) 19:48, 31 August 2007 (UTC)

- I don't recall exactly, but I think someone made a mistake in one of the standards. As the article says (or values in the other domain), "These values are sometimes used for the sRGB specification, although they are not standard." You could expand that to add the numbers and sources that you are speaking of. Dicklyon 20:17, 31 August 2007 (UTC)

- I just compared the W3C spec with the IEC 61966 draft. They use the same formulas for each part of the piecewise function, the only difference is the cutoff points. I checked the math, and the IEC draft has the correct intersection point between the linear and geometric curves. The W3C source is incorrect, which is unfortunate considering more people are likely to read it than the authoritative (but not freely available) IEC standard. The error is ultimately pretty small, though, restricted to the region near the transition point. --69.232.159.225 19:25, 16 September 2007 (UTC)

- Good work! Please feel free to add that explanation to the article. --jacobolus (t) 19:50, 16 September 2007 (UTC)

- Which numbers are you saying are correct? Do they result in a slope discontinuity at the intersection of the curves, or not? It would be good to say. Dicklyon 20:49, 16 September 2007 (UTC)

- Actually, is the IEC 61966-2-1 draft from 1998 different from the IEC 61966-2-1:1999 spec? Does anyone have access to the spec, who could check this out? Maybe the current spec was changed after that draft and the W3C is repeating the correct version?? --jacobolus (t) 21:03, 16 September 2007 (UTC)

- I just noticed the W3C doc is from 1996, before sRGB was standardized. Looks like they had some better numbers then, but it didn't quite get standardized that way; their value 12.92 was not accurate enough to make the curve continuous, and rather than fix it they changed the threshold to achieve continuity when using exactly 12.92 and 1/2.4. The result is a slope discontinuity, from 12.92 below the corner to 12.7 above, but at least the curve is continuous, almost exactly. Dicklyon 21:35, 16 September 2007 (UTC)
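The two cutoffs can be compared with a short Python sketch. It takes the encoding segments exactly as discussed here (12.92·K below the corner, 1.055·K^(1/2.4) − 0.055 above it) and looks for where they meet. The helper names and the bisection bracket are choices made for this illustration: the two segments nearly touch, so the bracket [0.00304, 0.004] is picked to isolate the upper crossing.

```python
def linear_segment(k):
    """Encoded value from the linear part of the curve."""
    return 12.92 * k


def power_segment(k):
    """Encoded value from the power-law part of the curve."""
    return 1.055 * k ** (1 / 2.4) - 0.055


def gap(k):
    """Vertical distance between the two segments at linear value k."""
    return power_segment(k) - linear_segment(k)


def upper_crossing(lo=0.00304, hi=0.004, iterations=60):
    """Bisect for the crossing, assuming gap(lo) > 0 > gap(hi)."""
    for _ in range(iterations):
        mid = (lo + hi) / 2
        if gap(mid) > 0:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def power_slope(k):
    """Derivative of the power-law segment with respect to k."""
    return (1.055 / 2.4) * k ** (1 / 2.4 - 1)


crossing = upper_crossing()
print("segments meet at K =", crossing)
print("gap at K = 0.00304:", gap(0.00304))
print("slope just above the corner:", power_slope(crossing))
```

With exactly these constants the segments meet very close to 0.0031308, the power segment's slope there is about 12.7 against 12.92 for the linear part, and at 0.00304 the two segments differ by roughly one part in 100,000 of full scale. That matches the description of a curve that is continuous but has a small kink at the corner.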

I have both the 4th working draft IEC/4WD 61966-2-1 (which is currently available on the web) and the final IEC 61966-2-1:1999 document in front of me. These two sources agree on the numerical values of the transformation and with what is currently (after my edit of 8/22/10) on the Wikipedia page in the "Specification of the transformation" section. (The draft version does neglect to mention that when converting numbers in the range [0, 1] to digital values, one needs to round. This is explicit in the final version.) As described under the "Theory of the transformation" section, the value of K0 in 61966-2-1:1999 differs from the value given in ref [2]. In addition, the forward matrix in [2] differs slightly from that in 61966-2-1:1999. The reverse matrix is the same in [2] and 61966-2-1:1999. I don't know what the origin of the difference in the forward matrix values is. I did verify that if one takes the reverse matrix to the four decimal places specified, inverts it (using the inv() function in Matlab) and rounds to four places, one gets the forward matrix in 61966-2-1:1999. It is possible (but pure speculation on my part) that the forward matrix in [2] was obtained by inverting a reverse matrix given to higher precision than published. DavidBrainard (talk) 02:30, 24 August 2010 (UTC)

Following a suggestion by Jacobolus, I computed the reverse transformation from the primary and white point specifications. This matches (to four places) what is in all of the documents. If you invert this matrix without rounding, you get (to four places) the forward transformation matrix of reference 2 of the sRGB page (Stokes et al., 1996). If instead you round to four places and then invert, you get (to four places) the forward transformation matrix of 61966-2-1:1999. So, that is surely the source of the discrepancy in the forward matrix between the two sources. DavidBrainard (talk) 13:49, 24 August 2010 (UTC)
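The computation described above can be reproduced with a self-contained Python sketch. It assumes the usual sRGB chromaticities (red 0.64, 0.33; green 0.30, 0.60; blue 0.15, 0.06) and the D65 white point (0.3127, 0.3290); all function names are invented for the illustration, and "reverse" and "forward" follow the usage in this thread (reverse is RGB to XYZ, forward is XYZ to RGB).

```python
def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    return (
        ((e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det),
        ((f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det),
        ((d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det),
    )


def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(row[j] * v[j] for j in range(3)) for row in m)


def xy_to_xyz(x, y):
    """Tristimulus values of a chromaticity (x, y) at Y = 1."""
    return (x / y, 1.0, (1 - x - y) / y)


def rgb_to_xyz_matrix(primaries, white):
    """Reverse matrix: columns are the primaries scaled so R=G=B=1 gives white."""
    cols = [xy_to_xyz(*p) for p in primaries]
    p = tuple(tuple(cols[j][i] for j in range(3)) for i in range(3))
    scale = mat_vec(inverse3(p), xy_to_xyz(*white))
    return tuple(tuple(p[i][j] * scale[j] for j in range(3)) for i in range(3))


def round_matrix(m, places=4):
    """Round every entry of a matrix to the given number of decimals."""
    return tuple(tuple(round(c, places) for c in row) for row in m)


SRGB_PRIMARIES = ((0.64, 0.33), (0.30, 0.60), (0.15, 0.06))  # R, G, B as (x, y)
D65_WHITE = (0.3127, 0.3290)

reverse = rgb_to_xyz_matrix(SRGB_PRIMARIES, D65_WHITE)
forward_unrounded = inverse3(reverse)                      # invert, then round
forward_rounded_first = inverse3(round_matrix(reverse))    # round, then invert

for name, m in (("reverse", reverse),
                ("forward (invert, then round)", forward_unrounded),
                ("forward (round, then invert)", forward_rounded_first)):
    print(name)
    for row in round_matrix(m):
        print("   ", row)
```

The two forward matrices differ in the fourth decimal place of several entries (for example 3.2410 against 3.2406 in the top-left corner), which is the size of discrepancy described above.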

- It seems to me to make the most sense to do all math at high precision and save the rounding for the last step. What’s the justification for rounding before inverting the matrix? –jacobolus (t) 15:20, 24 August 2010 (UTC)

- I don't know the thinking used by the group that wrote the standard. Their choice has the feature that you can produce the forward transform as published in the standard from knowledge of the reverse transform to the four places specified in the standard. It has the downside that you get less accurate matrices than if you did everything starting at double precision with the specified monitor primaries and white point. This same general issue (rounding) seems to be behind differences in the way the cutoff constant is specified as well. For the transformation matrices, the differences strike me as so small not to be of practical importance, except that having several versions floating around produces confusion. DavidBrainard (talk) 01:54, 25 August 2010 (UTC)

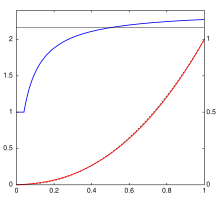

Effective gamma

Does anyone actually use this definition for practical purposes? To me it would appear much more logical to define the effective gamma as the solution for the equation g(K) = K^gamma_eff, which is gamma_eff=log g(K)/log K. I.e., not the slope of the curve, but the slope of the line connecting a curve point to the origin. This function is 1.0 at k->0, 1.46 at k=1/255 and exactly 2.2 at k=0.39, and 2.275 for k->1. Han-Kwang (t) 08:58, 13 October 2007 (UTC)

- No, the slope is always what's used. See this book, for example; or this more modern one. These are in photographic densitometry; in computer/video stuff, the same terms were adopted long ago; see this book on color image processing. Try some googling yourself and let us know what you find. Dicklyon 16:32, 13 October 2007 (UTC)

- Usually, to avoid confusing people with the calculus concept of a derivative as a function of level, the gamma is defined as a scalar as the slope of the "straight line portion" of the curve. Depending on where you take that to be for sRGB, since it's not straight anywhere (and has no shoulder hence no inflection point), you can get any value on the curve shown in blue; but in the middle a value near 2.2. Some treatments do omit the straight line bit and talk about derivatives, like this one. Dicklyon 16:41, 13 October 2007 (UTC)
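The two candidate definitions in this section can be put side by side in a few lines of Python. This is a sketch with invented function names, assuming the standard piecewise decoding curve: `chord_gamma` is the log g(K)/log K definition proposed at the top of the section, and `slope_gamma` is the local slope on log-log axes.

```python
import math


def srgb_to_linear(v):
    """Decode an sRGB-encoded value v in [0, 1] to linear light."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def chord_gamma(v):
    """Exponent g with v**g equal to the decoded value; needs 0 < v < 1."""
    return math.log(srgb_to_linear(v)) / math.log(v)


def slope_gamma(v):
    """Local log-log slope of the decoding curve at v > 0."""
    if v <= 0.04045:
        return 1.0
    return 2.4 * v / (v + 0.055)


for v in (1 / 255, 0.39, 0.999999):
    print(round(v, 6), round(chord_gamma(v), 3), round(slope_gamma(v), 3))
```

The chord definition reproduces the figures quoted above (about 1.46 at 1/255, 2.2 near 0.39, and 2.275 approaching 1), while the slope definition gives 1.0 on the linear segment and a lower value than the chord in the midtones. Both tend to 2.4/1.055 at the top of the range.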

Formulae help

Could someone please plug the following RGB values, (0.9369,0.5169,0.4710), into the conversion formula? I'm getting strange results (e.g., values returned for XYZ that are greater than 1 or less than 0). SharkD (talk) 07:22, 21 February 2008 (UTC)

- Multiplying the reverse transformation matrix times the above, I get (0.6562,0.6029,0.5274). PAR (talk) 15:26, 21 February 2008 (UTC)

- Oops. I was using the forward transformation matrix instead of the reverse. SharkD (talk) 21:05, 21 February 2008 (UTC)
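For anyone wanting to repeat the check, here is a minimal Python sketch of the calculation. It assumes the four-decimal matrices usually quoted from IEC 61966-2-1 and treats the three input values as linear (not gamma-encoded) components, as the answer above appears to have done; the names are invented for the example.

```python
# "Reverse" matrix in this page's terminology: linear sRGB -> XYZ.
SRGB_TO_XYZ = (
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
)

# "Forward" matrix: XYZ -> linear sRGB.
XYZ_TO_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def apply_matrix(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(row[j] * v[j] for j in range(3)) for row in m)


rgb_linear = (0.9369, 0.5169, 0.4710)

xyz = apply_matrix(SRGB_TO_XYZ, rgb_linear)      # the intended conversion
mistake = apply_matrix(XYZ_TO_SRGB, rgb_linear)  # the matrices swapped

print(tuple(round(c, 4) for c in xyz))
print(tuple(round(c, 4) for c in mistake))
```

The first line reproduces (0.6562, 0.6029, 0.5274); the second shows how applying the forward matrix by mistake pushes the first component well above 1.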

sRGB colors approximating monochromatic colors

The link I added to http://www.magnetkern.de/spektrum.html (the visible electromagnetic spectrum displayed in web colors with the corresponding wavelengths) was reverted by another user with the reason that a German site is not suitable. The page is bilingual (English and German) and contains references to English sources, so I cannot understand the reasons for deleting the link. See the discussion about the "Wavelength" article. 85.179.9.12 (talk) 11:05, 26 June 2008 (UTC) and 85.179.4.11 (talk) 14:02, 1 July 2008 (UTC)

Use areas

The very first sentence of the article is completely ludicrous. I am of course talking about the use of sRGB "on the Internet". Should sRGB also be used on LANs and maybe even RS-232 connections? --84.250.188.136 (talk) 23:59, 14 August 2008 (UTC)

- Sort of ludicrous; nevertheless, more meaningful than it might appear, since it refers to both WWW (HTML page images) and email (image attachment) usage; yes, it would apply to LAN and other inter-computer connections as well. The point is, it's the most common standard for interchanging pictures over networks. Is there a better way to put it? Dicklyon (talk) 00:20, 15 August 2008 (UTC)

- To call sRGB a standard for the internet is similar to calling it a standard for compact discs and USB sticks. I believe the "LAN" example by the anonymous guy was just an attempt to provide an even funnier example of the same kind of mistake.

- I believe a color space is here to define the transformation between what is stored as digital data and what is to be shown in reality (printed or rendered on my screen) or what is to be grabbed from reality (camera, scanner).

- Thus saying sRGB is a standard for the Internet seems absurd and can be seen as an appeal to use sRGB wherever possible because it most probably will "appear" "on internet" at least for several minutes (:-D)

- Following your reasoning by "interchange", saying sRGB is a standard for image files would be more correct here (if it is true).

- But I am getting e-mails with image attachments that are not in sRGB intentionally and the true reason is just the perfect color information interchange (using calibrated devices in apparel industry).

- Also, on Wikipedia (encyklopedia) we should try to distinguish between words like: Internet, WWW, Email, FTP and today also FB, because some younger guys believe Internet IS FaceBook... could be some believe Internet IS Wikipedia :-D)

- I definitely think this needs an update including explanation what is meant by word "internet" and why. Otherwise I am going to remove all occurences of word "internet" from the article.

- Eltwarg (talk) 22:24, 30 November 2011 (UTC)

- Before you get too excited, take a look at the title of the 1996 paper that introduced sRGB (ref. 2 by Stokes). Maybe even read it to see where this is coming from. Dicklyon (talk) 00:55, 1 December 2011 (UTC)

sRGB in GIMP ?

The article states that sRGB is "well accepted and supported by free software, such as GIMP". Does anyone have anything to back up this statement? From my visual experience, Gimp does not comply with sRGB at all. Sam Hocevar (talk) 22:09, 30 August 2008 (UTC)

- I removed mention of the GIMP and "free software", as I have been trying for months to set up a usable sRGB workflow on a Linux system and completely failed (I ended up writing my own software). Sam Hocevar (talk) 01:29, 1 September 2008 (UTC)

- You are right that GIMP is not doing anything special about sRGB. However I don't understand your complaint about Linux, in fact in most cases sRGB is the *only* colorspace supported, and it is difficult/impossible to get a program to work in a color space that is *not* sRGB. —Preceding unsigned comment added by 72.67.175.177 (talk) 17:12, 16 September 2008 (UTC)

- Open source developers are too dumb to understand what a color space is. In fact I've been using linux for years and I know of no free software that handles colors properly when doing things such as affine transformations on images. Femmina (talk) 03:22, 6 October 2008 (UTC)

- Who cares as long as the terminal is black and the text is white? It's not a matter of stupidity, it's just low priority.. .froth. (talk) 00:31, 16 February 2009 (UTC)

NPOV

I tagged the article NPOV because many of its claims are unsourced and it is so biased it seems to have been written by a Microsoft employee. For example, there is no source that Apple has switched to sRGB. --Mihai cartoaje (talk) 09:06, 30 July 2009 (UTC)

- I've done my best to keep the article sourced and accurate, and I'm no fan of Microsoft. And I don't see anything about Apple in the article. So I reverted the NPOV tag. If there are problems needing attention, please do point them out here or with more specific tags in the article. Dicklyon (talk) 03:32, 31 July 2009 (UTC)

- I don't see any of the above mentioned problems, at least not with the current edition. ChillyMD (talk) 18:42, 27 September 2009 (UTC)

Timeline

I just added a tentative timeline context in the article, but I'm not sure it's correct. My addition is based on the date of the proposal in reference #2 ("w3c"), i.e. 1996. But the standard proper is dated 1999 (IEC 61966-2-1:1999). And to make matters worse, the w3c reference makes references to earlier, unnamed versions of the same document (see section "sRGB and ITU-R BT.709 Compatibility" in that document). So, when was the standard created? I strongly feel we should place the article in its proper timeline (something it lacked altogether), but I'm not sure which date we should use. --Gutza T T+ 21:50, 29 September 2009 (UTC)

Plot of the sRGB

The plot and text contain serious errors and need to be corrected. Gamma makes sense in log space only. By definition, gamma = d(log(Lout)) / d(log(Lin)). The sRGB conversion function looks natural only in log space. However, according to an existing tradition, we use the inverse value of gamma that relates to encoding. For example, we use the conventional value of 2.199 instead of the true value of 0.4547 for the aRGB encoding.

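The sRGB gamma in the linear space:

$$\gamma(c) = \frac{2.4}{1.055}\left(1.055 - 0.055\,c^{-1/2.4}\right),$$

where c is the input normalized luminance (0.0031308 < c ≤ 1).

The sRGB gamma in the log2 space:

$$\gamma(L) = \frac{2.4}{1.055}\left(1.055 - 0.055\cdot 2^{-L/2.4}\right),$$

where L is the input normalized luminance, expressed in stops (-8.31925 < L ≤ 0).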

When c = 1 or L = 0, the value is maximal: (2.4/1.055)(1.055-0.055) = 2.275.

Alex31415 (talk) 22:12, 7 February 2010 (UTC)
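
A quick numerical check of the figure quoted above, as a sketch only. It assumes the power-law branch of the sRGB encoding, V = 1.055·c^(1/2.4) − 0.055, and takes "gamma" in the conventional (inverse) sense described in this comment, i.e. the reciprocal of d(log V)/d(log c). Working that derivative out by hand gives (2.4/1.055)(1.055 − 0.055·c^(−1/2.4)), which the code compares against a finite-difference estimate. The function names are illustrative.

```python
import math


def srgb_encode(c):
    """Power-law branch of the sRGB encoding (valid for 0.0031308 < c <= 1)."""
    return 1.055 * c ** (1 / 2.4) - 0.055


def gamma_closed_form(c):
    """Reciprocal of d(log V)/d(log c), derived by hand from srgb_encode."""
    return (2.4 / 1.055) * (1.055 - 0.055 * c ** (-1 / 2.4))


def gamma_numeric(c, h=1e-6):
    """Same quantity from a central difference in log-log space."""
    d_log_v = math.log(srgb_encode(c * (1 + h))) - math.log(srgb_encode(c * (1 - h)))
    d_log_c = math.log(1 + h) - math.log(1 - h)
    return d_log_c / d_log_v


for c in (0.01, 0.2, 1.0):
    print(c, round(gamma_closed_form(c), 4), round(gamma_numeric(c), 4))
```

At c = 1 the closed form reduces to 2.4/1.055, matching the maximum of about 2.275 stated above; in stops, substitute c = 2^L.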

Diagram broken?

Is the image at the top (the CIE horseshoe) broken for anybody else, or just me?

- It's not "broken" for me, but it's not a very good diagram: its color scheme, text sizes/positions, and line widths make it virtually unreadable, and much of it is dramatically harder to interpret than necessary. Additionally, the coloring inside the horseshoe isn’t my favorite, though this is a problem with no perfect solutions. Finally, it should really use the 1976 (u', v') chromaticity diagram, rather than the 1931 (x, y) diagram. I started working on some better versions of these diagrams at some point, but got distracted. As an immediate step I’d support reverting to some earlier version with readable labels. –jacobolus (t) 01:36, 18 February 2011 (UTC)

- For me I am getting a tiny gray rectangle in the page, about the size of the letter 'I'. Reloading the image and clearing the Firefox cache do not seem to fix it. Clicking on the gray rectangle leads me to the page showing the full-size image. Spitzak (talk) 02:21, 18 February 2011 (UTC)

- There are some other suggestions at Wikipedia:Bypass your cache –jacobolus (t) 02:31, 18 February 2011 (UTC)

- Ctrl+Shift+R fixed it, thanks. It was a local problem as I thought...

Still problems with the matrix

The matrix still seems to be either wrong or incompletely documented. As far as I understand, the XYZ vectors corresponding to each R,G,B point must yield R = 1 for the R-point etc. In other words, if the XYZ values for the red point, (0.64, 0.33, 0.03), are applied, then from the multiplication rules of matrices with vectors, it must be for red: 3.2406*0.64-1.5372*0.33-0.4986*0.03=1 (first row for the red value) and correspondingly 0 for the others. But actually the matrix yields R = (1.551940,0.000040,-0.000026). While the small non-zero values may be due to rounded values given in the matrix, the 1.55 times too high red value must be due to some systematic error. The other points G, B have values below 1, so no constant-value adjustment will do. Could someone please fix this or, if this mis-scaling is a feature rather than a bug, explain this in detail?--SiriusB (talk) 20:18, 4 September 2011 (UTC)

- I think you’re misunderstanding what chromaticity coordinates mean (they aren’t a transformation matrix, for one thing). The numbers and text in this article are fine. –jacobolus (t) 01:06, 5 September 2011 (UTC)

- He misunderstands, but his calculations demonstrate that the matrix does the right thing. It maps the xyz of R to a pure red in RGB, etc., to within a part in 10000 or so. The factors like 1.5 are not relevant, since he started with chromaticities (not XYZ), which lack an intensity factor. The real test is to see if the white point in xyz maps to equal values of r, g, and b. Dicklyon (talk) 01:27, 5 September 2011 (UTC)

- Then there is a step missing between the matrix calculation and the gamma correction: The values have to be rescaled into the interval [0:1] since this is assumed by the gamma formulae.--SiriusB (talk) 06:59, 5 September 2011 (UTC)

- No, you’re still misunderstanding. The chromaticity coordinates tell you where in a chromaticity diagram the three primaries R, G, and B are for a particular RGB color space, but without also knowing the white point they don’t give you enough information to fully transform RGB values to XYZ, because chromaticity coordinates don’t say anything about what the intensity of a light source is (i.e. what Y value to use). For that you should use the transformation matrix in sRGB#The forward transformation (CIE xyY or CIE XYZ to sRGB). –jacobolus (t) 08:26, 5 September 2011 (UTC)

- No, you are still misunderstanding me: Using exactly this matrix for the given chromaticity values yields R>1. So, *before* proceeding to the next step *after* using the matrix, namely the gamma correction, one has to rescale these values to make sure that they are in range. Note that the gamma formula assumes that all values are within 0 and 1. If not, please clarify this in the article.--SiriusB (talk) 08:34, 5 September 2011 (UTC)

- What? If you have measured X, Y, and Z (where you have scaled Y such that a value of 1 is the white point, or sometimes in the case of object colors, is a perfectly diffusing reflector), then when you apply the transformation matrix provided, you’ll get just correct values for (linear) R, G, and B. These can then have a gamma curve applied. If you’re still talking about trying to draw a color temperature diagram, however: Given any chromaticity coordinates (x, y) you can pick any value for Y you like, which means when you convert from xyY to XYZ space, you have free rein to scale the resulting X, Y, and Z by any constant you like without changing chromaticity. –jacobolus (t) 18:25, 5 September 2011 (UTC)

- Right, what J said. There will always be X,Y,Z triples that map outside the range [0...1], so some form of clipping is needed. If you interpret an x,y,z as an X,Y,Z, you get such a point in the case of the red chromaticity, but not for the green and blue; it means nothing, since x,y,z is not X,Y,Z. If you want to know what X,Y,Z corresponds to an R,G,B, use the inverse matrix; that will restore the intensity that's missing from chromaticity. Dicklyon (talk) 18:37, 5 September 2011 (UTC)

Thanks for the replies so far. One additional note: on Bruce Lindbloom's page I have found matrices which may be even more accurate than the ones given in the article. I have tested the ones for sRGB and found that they are accurate in the first six digits, meaning that the zero-coefficients in each direction (xyz to RGB and vice versa) differ from zero by no more than 3⋅10^-7. However, as far as I understood the earlier discussions on this, the present coefficients are considered "official" (since they were derived from some draft of the official standard) while Lindbloom's might be from his original research. Any opinions on that?--SiriusB (talk) 19:16, 6 September 2011 (UTC)

- Maybe you intended this link? –jacobolus (t) 19:54, 6 September 2011 (UTC)

- Yes, sorry, had the wrong (sub)link in the clipboard. The illuminant subpage is already linked to from Standard illuminant--SiriusB (talk) 09:13, 7 September 2011
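
To make the chromaticity-versus-XYZ point in this thread concrete, here is a small sketch. It assumes the four-decimal XYZ-to-linear-RGB matrix whose first row is quoted above, a D65 white of XYZ = (0.9505, 1.0, 1.0890), and a luminance of Y = 0.2126 for the red primary; the last two values are standard figures but are not stated in this discussion. Helper names are illustrative.

```python
# XYZ -> linear sRGB, four-decimal coefficients (first row as quoted in the thread)
M_XYZ_TO_RGB = [
    [3.2406, -1.5372, -0.4986],
    [-0.9689, 1.8758, 0.0415],
    [0.0557, -0.2040, 1.0570],
]


def mat_vec(m, v):
    """Multiply a 3x3 matrix (list of rows) by a length-3 vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def xyY_to_XYZ(x, y, Y):
    """Restore tristimulus values from chromaticity (x, y) and luminance Y."""
    return (x / y * Y, Y, (1 - x - y) / y * Y)


# 1. Chromaticity (x, y, z) of red misread as (X, Y, Z): red comes out near 1.55
misread = mat_vec(M_XYZ_TO_RGB, (0.64, 0.33, 0.03))

# 2. Same chromaticity with the red primary's luminance restored (assumed Y = 0.2126)
red_rgb = mat_vec(M_XYZ_TO_RGB, xyY_to_XYZ(0.64, 0.33, 0.2126))

# 3. The test suggested above: the white point should map to equal R, G, B
white_rgb = mat_vec(M_XYZ_TO_RGB, (0.9505, 1.0, 1.0890))

print([round(t, 4) for t in misread])
print([round(t, 4) for t in red_rgb])
print([round(t, 4) for t in white_rgb])
```

The factor of roughly 1.55 in the first result is just 0.33/0.2126, the ratio between the y chromaticity and the actual luminance of the red primary, so no rescaling step is missing from the matrix itself.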

Dynamic range

The article states that a "12-bit digital sensor or converter can provide a dynamic range [...] 4096". This is not true: if the code 0 is really an intensity of 0, then the dynamic range is infinite (a division by zero). The next sentence states that the dynamic range of sRGB is 3000:1; can someone point me to a reference? To my knowledge, the Amendment 1 to IEC 61966-2-1:1999 defines white and black intensities of 80 cd/m² and 1 cd/m², and thus the dynamic range would be 80:1. --FRBoy (talk) 06:01, 1 December 2011 (UTC)

- Using 12-bits vs. 8-bits doesn’t inherently make any difference to the recordable or representable dynamic range, which depend instead on the properties of the sensor and so on. Obviously though, if you have lots of dynamic range, and not too many bits to store it in, then you end up with some big roundoff error ("posterization"). –jacobolus (t) 16:24, 1 December 2011 (UTC)
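
As an illustration of how much the answer depends on the definition chosen, here is a rough sketch comparing two ratios. It assumes the usual piecewise sRGB decoding (threshold 0.04045, slope 12.92) and takes "encoding range" to mean white divided by the smallest non-zero 8-bit code. Whether the article's 3000:1 figure was derived this way is not known from this thread, so treat that reading as a guess.

```python
def srgb_to_linear(v):
    """Decode a normalized sRGB value (0..1) to linear light, piecewise definition."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


# Ratio between white and the smallest non-zero 8-bit code (code 1 of 255)
smallest_nonzero = srgb_to_linear(1 / 255)
ratio_8bit_codes = srgb_to_linear(1.0) / smallest_nonzero

# Ratio implied by the display luminances mentioned above (80 and 1 cd/m²)
ratio_luminance = 80 / 1

print(round(ratio_8bit_codes, 1))  # 3294.6
print(ratio_luminance)             # 80.0
```

Neither number says anything about what a particular sensor or display can resolve; they only show that the code-value ratio and the viewing-condition ratio are different quantities.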

Enumerating sRGB gamut

Hi. For a lecture, I created a video that enumerates all the colors in the sRGB gamut (you may have to store it to disk before viewing). Instead of a diagram showing a certain cut through the gamut, it shows all colors where CIELAB L=const, looping from L=0 to L=100. Of course, the chromaticity corresponding to a given RGB pixel is in the right place in the diagram. The white parts are out-of-gamut colors. If editors find it useful for this or a different article, I can generate an English version specifically for Wikipedia, maybe encoded in a more desirable way, or just as an animated gif. Comments and suggestions very welcome. — Preceding unsigned comment added by Dennis at Empa Media (talk • contribs) 19:36, 30 September 2012 (UTC)

Looking at the user feedback for this article, I could also easily generate a 3D representation of the sRGB solid in CIELAB. Dennis at Empa Media (talk) 14:22, 1 October 2012 (UTC)

- Why did you plot it in terms of x/y? –jacobolus (t) 18:53, 1 October 2012 (UTC)

- Instead of e.g. CIELAB a/b, you mean? It was simply to shed some more light into the chromaticity diagrams. Can be done differently, of course. Dennis at Empa Media (talk) —Preceding undated comment added 17:09, 2 October 2012 (UTC)

Acronym

Somewhere in the introduction there should be a sentence explaining what "sRGB" actually stands for. Many computer-savvy people can probably figure out the RGB part (though I assert that's assuming more knowledge than many people might have). But what about the "s" part? In general, one should never assume that a reader knows what an acronym expands into. I'd fix it myself, except I don't know what the "s" stands for. — Preceding unsigned comment added by 173.228.6.187 (talk) 23:09, 27 January 2013 (UTC)

- The letter s in sRGB is likely to come from "standard", but I could not find any truly reliable sources stating that. All sources that I found that made a statement about it appeared to assume that that's where it must come from, or from "standardized", or even from "small". Well maybe that ambiguity could somehow be expressed in the article. Olli Niemitalo (talk) 23:51, 27 January 2013 (UTC)

Color mixing and complementary

If the standard defines a non-linear power response with γ ≈ 2.2, then does it mean that three complementary pairs of the form (0xFF, 0x7F, 0) ↔ (0, 0x7F, 0xFF) in whatever order are blatantly unphysical? Namely, these are:

Incnis Mrsi (talk) 04:56, 18 June 2013 (UTC)

- That is correct. Well, maybe not "blatantly", but it's not right. If you want orange and azure to add up to white you need to use (0xFF, 0xBA, 0) and (0, 0xBA, 0xFF), since (186/255)^2.2 = 0.5. The use of 0x7F or 0x80 for half brightness is a common misconception. You can test this by making color stripes or checkerboards of the two colors and looking at them from far enough back that you don't resolve the colors. Dicklyon (talk) 05:12, 18 June 2013 (UTC)

- So, what do we have to do with the aforementioned six colors? There are two solutions:

- State that “true” orange, lime, spring green, azure, violet, and rose lie on half brightness, but incompetent coders spoiled their sRGB values;

- Search for definitions independent from (s)RGB and compare chromaticities.

- Incnis Mrsi (talk) 05:41, 18 June 2013 (UTC)

- There’s no such thing as “true orange”, “true lime”, “true spring green”, etc. The names are arbitrary, and every system of color names is going to attach them to slightly different colors. Though with that said, the X11 / Web color names were chosen extremely poorly, and the colors picked for each name are typically very far from the colors a painter or fashion designer or interior decorator (or a layman) would attach to that name. The biggest problem here is that they tried to choose colors systematically to align with “nice” RGB values, but the colors at the various corners and edges of the sRGB gamut don’t have any special relation to color names in wide use. –jacobolus (t) 07:02, 19 June 2013 (UTC)

- Thanks for your reply. I actually expected something like this except for the wide use qualifier. Wide use by whom do you mean? Painters, fashion designers, interior decorators… I would also add printers and pre-press, are they actually so wide? Incnis Mrsi (talk) 16:27, 19 June 2013 (UTC)

- I just mean, if you want to name some specific pre-chosen color on your RGB display, it’s likely no one has a name that precisely matches that color. So if you just arbitrarily pick a name sometimes used for similar colors, then at the end you get a big collection of (name, color) pairs that don’t match anyone’s idea of what those names should represent. –jacobolus (t) 23:32, 19 June 2013 (UTC)
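
The half-brightness arithmetic from earlier in this section can be checked with a few lines. This is a sketch that assumes a pure power law with γ = 2.2, as in the comment giving (186/255)^2.2 = 0.5; the exact piecewise sRGB curve gives a slightly different code value. Function names are illustrative.

```python
GAMMA = 2.2  # pure power-law approximation used in the discussion above


def to_linear(code):
    """8-bit code value -> linear intensity in 0..1."""
    return (code / 255) ** GAMMA


def to_code(linear):
    """Linear intensity in 0..1 -> nearest 8-bit code value."""
    return round(255 * linear ** (1 / GAMMA))


half_code = to_code(0.5)
print(half_code, hex(half_code))  # 186 0xba

# Green channel of orange + azure when added as light (linear sum)
green_sum_ba = to_linear(0xBA) + to_linear(0xBA)
green_sum_7f = to_linear(0x7F) + to_linear(0x7F)
print(round(green_sum_ba, 3), round(green_sum_7f, 3))
```

With 0xBA the two greens add up to roughly full intensity, while with 0x7F they reach well under half, which is why the 0x7F pairs do not mix to white.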

Does red of Microsoft become orange in a spectroscope?

The illustration, commons:File:Cie Chart with sRGB gamut by spigget.png, shows sRGB’s primary red somewhere near 608 nm. Even if we try to determine the dominant wavelength and draw a constant hue segment from any of the standard white points through it to the spectral locus, it will not meet the latter at any wavelength longer than 614 nm. Spectroscopists classify waves shorter than 620 (or sometimes even 625) nanometres as orange. Is something wrong in some of these data, or do monitor manufacturers blatantly fool consumers with the help of the hypocritical standard?

No, do not say anything about illuminants and adaptations to white points please, standard ones or whatever. A spectral color is either itself or black, everywhere. Under any illuminant. Incnis Mrsi (talk) 16:27, 19 June 2013 (UTC)

- According to the ISCC–NBS system of color designation, the “R” primary of RGB would be called “vivid reddish orange”. You can see where it plots relative to some other landmark colors in the image to the right, which plots colors in terms of Munsell hue/value. Likewise, the “G” primary is a yellowish green, and the “B” primary is on the edge between the “blue” and “purplish blue” categories. None of these primaries is close to the typical representative color for “red”, “green”, or “blue”. But that’s sort of irrelevant, since their purpose is not to match a color name. It would be silly to call it the “reddish orange, yellowish green, purplish blue color model”, or more accurately label the secondary colors as “purple, greenish blue, and greenish yellow”. –jacobolus (t) 23:44, 19 June 2013 (UTC)

- They're not that terrible, though. One can wish that Microsoft and HP had defined more extreme colors as the primaries for sRGB, or that monitor manufacturers wouldn't "blatantly fool consumers with the help of the hypocritical standard", but that standard basically just encoded what all the TVs and monitors out there were already doing. The red primary is accepted as "red" by most people. Similarly for green and blue. Probably because it's the reddest red you can get out of the device; etc. And trying to make a longer-wavelength red primary or a shorter-wavelength blue primary is a very inefficient use of power, since the perceived intensity drops rapidly as you try to move the primaries into those corners. I think you'll find the red primaries on many LED displays, on phones and such, even more orange for that reason. Dicklyon (talk) 23:54, 19 June 2013 (UTC)

- Yep, exactly. The primaries are just fine considering their purpose, and calling them R, G, and B is relatively unambiguous, even if it occasionally causes confusion like Incnis Mrsi is experiencing. –jacobolus (t) 23:59, 19 June 2013 (UTC)

- “Purplish blue” in reference to B is a nonsensical artefact of Munsell notation, or similar ones. This color has the same hue as an actual mix of blue with purple could have, but it is indigo, one of the recognized spectral colors. Incnis Mrsi (talk) 09:35, 20 June 2013 (UTC)

- I realize it’s probably not intentional, but could you please stop using loaded language like “blatantly fool”, “hypocritical”, “nonsensical artefact”, and similar? It’s obnoxious and makes me want to stop talking to you. “Purplish blue” is the ISCC–NBS category (the ISCC–NBS system is one of the only well-defined, systematic methods of naming colors, is in my opinion quite sensible (was created by a handful of experts working for the US National Bureau of Standards with quite a bit of input/feedback from all the experts in related fields they could find), and is in general more useful than the arbitrary set of “spectral color” names invented by Isaac Newton). In any event, “Indigo” would also be a reasonable name for this color. The point is it’s not particularly close to what would typically be called “blue”. –jacobolus (t) 11:36, 20 June 2013 (UTC)

- Well, subtractive-oriented (or pigment-oriented), not nonsensical: an additive-minded expert does not see a sense in defining the set of as many as 8 primary terms (for saturated colors at full lightness) which misses violet. You think ISCC–NBS has more sense than works of Newton, but I think the only Newton's mistake was that he did not specify a quantitative description of his terms. Incnis Mrsi (talk) 17:15, 20 June 2013 (UTC)

- Incnis, you’re getting caught here by the amount of color science that you currently don’t understand, and it’s leading you to make statements which don’t make sense to anyone who has studied an introductory color science textbook. You’re stuck in a 19th century mindset about how color vision works. I recommend you read through that handprint.com site, or if you like I can suggest some paper books. –jacobolus (t) 06:39, 22 June 2013 (UTC)

Multiply and divide by 255: quick and dirty?

The other day I made what seemed like a minor correction to the section entitled Specification of the transformation. The text said, "If values in the range 0 to 255 are required, e.g. for video display or 8-bit graphics, the usual technique is to multiply by 255 and round to an integer." I changed it to "multiply by 256." Shortly thereafter the edit got reverted, apparently by a Silicon-Valley engineer who has contributed a great deal to Wikipedia articles on computer graphics.

Looking back on it, I can see that my edit wasn't quite correct. It should have been "multiply by 256 and subtract 1." I also forgot to correct the other problem sentence nearby, "(A range of 0 to 255 can simply be divided by 255)". That should be something like "add one, then divide by 256."

This is analogous to the problems one encounters when working with ordinal numbers, when things need to be scooted over by one before multiplying or dividing, then scooted back again.

The method described here is probably close enough that no one would ever notice the difference. I'm reminded of conversations I've had over the years with German engineers who are so particular about doing things correctly. Then they go nuts when they run into an engineer from another country who uses a "quick and dirty" method that's way easier and works every time. Zyxwv99 (talk) 17:20, 22 July 2013 (UTC)

- Your edit was obviously not correct, as the nearest integer to 1 × 256 is, surprisingly, 256 (i.e. out of range). So it would be for any input from (511/512,1]. As an alternative we can multiply by 256 and then apply the floor function instead of rounding, but the disadvantage of this composition is that it works only on [0,1) and fails when the input is exactly equal to 1. Why not leave Dicklyon’s version in place, indeed? Incnis Mrsi (talk) 17:56, 22 July 2013 (UTC)

- The standard approach (universally considered the most "correct", though it's not perfect) is to map the range of floats [0, 1] onto the range [0, 255] and then round to the nearest integer. Multiplying by 256 and subtracting 1 would take 0 to -1, so you'd need to round it up, and you wouldn't have any distinction between 0/256 and 1/256.

- To reverse this operation, we just divide by 255.

- Note that this sort of thing is the reason that Adobe Photoshop's "16-bit" mode uses the range [0, 32768] instead of [0, 65535]. They then have a precise midpoint, and can do some operations using simple bit shifting instead of multiplication/division. –jacobolus (t) 19:49, 22 July 2013 (UTC)

- I believe Zyxwv99 is confused by the conversion of 16 bits to 8, for which it is indeed better to shift right by 8 (equivalent to dividing by 256 and taking the floor). This is because each of the 256 results then has 256 numbers in it. The seemingly more accurate "multiply by 255.0/65535 and round" will not evenly space the values (0 and 255 have about 1/2 the values mapping to them) and this causes errors later on. However this does not apply to floating point, where the correct conversion is "multiply by 255 and round". It is true there are tricks that you can do in IEEE floating point to produce the same result as this using bit-shifts and masking, but it is still the same result and such tricks are probably outside this page's scope. Spitzak (talk) 20:28, 22 July 2013 (UTC)

- Converting from 16 bits to 8 is a bit tricky, and there's not an obvious “right” way to do it (just making even-width intervals compress down to each point creates problems too, at the ends), with different programs choosing to handle it in different ways. But yeah, the thing to do in floating point is relatively uncontroversial. –jacobolus (t) 05:16, 23 July 2013 (UTC)

- The edit I reverted is the opposite of what you're discussing: [6]. But wrong for a similar reason: dividing the max value 255 by 256 would not give you a max of 1.0, which is usually what you want. Dicklyon (talk) 05:53, 23 July 2013 (UTC)

- Thanks. I get it now. Zyxwv99 (talk) 15:00, 23 July 2013 (UTC)
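
For later readers, the conversions discussed in this section can be sketched as follows. This only illustrates the arithmetic described in the replies above and is not taken from any particular program; all function names are made up for the example.

```python
from collections import Counter


def float_to_u8(x):
    """Map a float in [0, 1] to 0..255: multiply by 255 and round to nearest."""
    return int(x * 255 + 0.5)


def u8_to_float(v):
    """Inverse mapping: divide by 255, so that 255 -> 1.0 exactly."""
    return v / 255


def u16_to_u8_shift(v):
    """16-bit -> 8-bit by dropping the low byte (divide by 256 and floor)."""
    return v >> 8


def u16_to_u8_scaled(v):
    """16-bit -> 8-bit as round(v * 255 / 65535), done in integer arithmetic."""
    return (2 * v + 257) // 514


# Multiplying by 256 and rounding sends 1.0 out of range
out_of_range = round(1.0 * 256)  # 256

# How many 16-bit inputs land on each 8-bit output
shift_counts = Counter(u16_to_u8_shift(v) for v in range(65536))
scaled_counts = Counter(u16_to_u8_scaled(v) for v in range(65536))

print(float_to_u8(0.0), float_to_u8(1.0), out_of_range)
print(shift_counts[0], shift_counts[128], shift_counts[255])
print(scaled_counts[0], scaled_counts[128], scaled_counts[255])
```

The shift gives every output exactly 256 inputs, while the scaled-and-rounded version gives the two end codes about half as many inputs as the others, which is the unevenness mentioned above.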

Other colour spaces than CIE 1931

Are there any attempts or proposals to transform sRGB into more recent colour spaces, like e.g. the CIE 1964 10-degrees standard observer? And are there any standard spectra for the R,G,B primaries (like the D65 standard illuminant for the whitepoint), which would be required for such a transformation?--SiriusB (talk) 08:49, 19 August 2013 (UTC)

- Is the spectrum necessary to locate a colour in the CIE 1964 or similar colour space? Are these spaces not based on the same tristimulus response functions as CIE 1931? Illuminants require the actual spectrum (not only X,Y,Z) because an illuminant maps pigment colours to light colours and such mapping has much more than only 3 degrees of freedom (see metamerism (color) for examples). None of R, G, or B is designed to illuminate anything. Incnis Mrsi (talk) 14:53, 19 August 2013 (UTC)

- I think instead of spectrum, you might mean spectral power distribution. In any case, primaries are defined in terms of XYZ (or Yxy), so they represent a color sensation, which can be created by any number of spectral power distributions. So the XYZ color sensation is standardized, not the SPD. One of the purposes of XYZ color space is to predict the color sensation produced by mixing two other color sensations. SPD is not necessary for this. But yes, illuminants like D65 are defined by a SPD. Fnordware (talk) 02:12, 20 August 2013 (UTC)

- The 1964 space does not use the same 3D subspace as the 1931 space, so there is not an exact conversion, in general. I don't know of attempts to make good approximate conversions, which would indeed need spectra, not just chromaticities. Spectra of sRGB-device primaries would let one find a conversion, but it would not necessarily be a great one in general. On the other hand, the differences are small. Dicklyon (talk) 04:51, 20 August 2013 (UTC)

@Incnis Mrsi: Yes, I am (almost) sure that the SPD is required since the color-matching functions (CMFs) themselves differ. The simplest attempt would be to reproduce the primary chromaticities by mixing monochromatic signals with equal-energy white. This is essentially what you get if you interpolate the shifts between monochromatic signals (the border curve of the color wedge) and the whitepoint in use (for E it would be zero since E is always defined as x,y = 1/3,1/3). This can be done with quite low computational effort. However, the more realistic way would be to fit Gaussian peaks to match the CIE 1931 tristimuli, or better, to use standardized SPDs of common RGB primary phosphors.

@Dicklyon: Sure, one would need a standardisation of RGB primary SPDs, since different SPDs like the whitepoint illuminants have been standardized. At least the latter should definitely be adjusted when using CIE 1964 or the Judd+Vos corrections to the 1931 2-deg observer, since otherwise white would no longer correspond to RGB=(1,1,1) (or #FFFFFF in 24-bit hex notation). Furthermore, even the definition of the correlated color temperature may differ, especially for off-Planckian sources (for Planckian colors one would simply re-tabulate the Planckian locus). On the other hand, the color accuracy of real RGB devices (especially consumer-class displays etc.) may be even lower than this. Maybe there has not yet been a need for a re-definition. However, according to the CVRL there are proposed new CMFs for both 2 deg and 10 deg observers, whose data tables can already be downloaded (or just plotted) there.--SiriusB (talk) 11:18, 20 August 2013 (UTC)

Cutoff point

The cutoff point 0.0031308 between the equations for going from linear to sRGB values doesn't seem quite right, since it should be where the curves intersect, which is actually between 0.0031306 and 0.0031307. A value of 0.0031307 would be closer to the actual point at about 0.0031306684425006340328412384159643075781075825647464610857164606110221623262003677722151859371187949498. It might make sense to give the cutoff point for the reverse equations 0.040448236277108191704308800334258853909149966736524277227456671094406337254508751617020202307574830752 with the same number of digits as the other cutoff point, as in, 0.040448. Κσυπ Cyp 16:06, 18 November 2013 (UTC)

- It would be best to make sure we give exactly the numbers in the standard. Dicklyon (talk) 22:13, 18 November 2013 (UTC)

- I agree, just stick with what the standard says, otherwise you are doing original research. If you found a reliable source that points out this discontinuity, you could mention that. But I would point out that the difference is small enough to be meaningless. In the conversion to sRGB, it is off by 0.00000003. For even a 16-bit-per-channel image, that would still only be about 1/500th of a code value. So it would truly not actually make any difference at all. Fnordware (talk) 02:09, 19 November 2013 (UTC)
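For anyone who wants to check the numbers in this thread, here is a minimal sketch, assuming the usual encoding constants (slope 12.92, offset 0.055, exponent 1/2.4). The function names are hypothetical. It bisects for the intersection of the two segments and measures the jump at the published cutoff of 0.0031308:

```python
def linear_segment(c):
    """Linear part of the encoding, used near black."""
    return 12.92 * c


def power_segment(c):
    """Power-law part of the encoding, used above the cutoff."""
    return 1.055 * c ** (1.0 / 2.4) - 0.055


def find_intersection(lo=0.00305, hi=0.0032, iterations=100):
    """Bisect for the upper crossing point of the two segments.

    The bracket is an assumption chosen so that the difference
    linear - power is negative at lo and positive at hi.
    """
    for _ in range(iterations):
        mid = 0.5 * (lo + hi)
        if linear_segment(mid) - power_segment(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


crossing = find_intersection()
published_cutoff = 0.0031308
gap = abs(linear_segment(published_cutoff) - power_segment(published_cutoff))

print("crossing point (linear side):", crossing)
print("crossing point (encoded side):", linear_segment(crossing))
print("jump at published cutoff:", gap)
print("jump in 16-bit code values:", gap * 65535)
```

The jump comes out as a small fraction of a single 16-bit code value, so the choice between the published cutoff and the exact crossing point has no practical effect on stored images.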

common practice discretizing

The article mainly focusses on mapping sRGB components in a continuous [0,1] interval, but of course all sRGB images are stored in some sort of digital format and they often end up with 8 bits per component, at some point. I am curious whether there were any official or commonly accepted guidelines on the discretizing to integers? It's of course possible that sRGB never specified how to quantise. If it is only an analog display standard, then this is not their problem.

Regarding the inverse process (discrete to continuous) I suppose it is fairly obvious that, e.g., for integers in the [0, 1, ..., 255] range one should simply divide by 255. That way the endpoint values 0 and 1 are both accessible. As with codecs, the goal of the digitizing (encoding) process is to produce the best result out of that defined inverse (decoding) process. If so, the rounding technique would depend on which error you want to optimize for. I can think of numerous approaches--

- Multiply by 255 and round. This divides the interval [0,1] into 256 unequal-sized intervals. The first and last are [0,1/510) and [509/510,1] respectively, while all intervals in between have a length of 1/255.

- Multiply by 256, subtract 0.5, and round. This divides the interval [0,1] into 256 equal-sized intervals. I.e. [0,1/256) maps to 0, [1/256,2/256) maps to 1, etc.

- Round to minimize intensity error. To minimize error after the sRGB->linear conversion, we should be careful that rounding up and rounding down by the same amount in sRGB does not give the same amount of change in intensity. To compensate for this we would tend to round down more often.

- Dithering of sRGB. Some sort of dithering has to be used if one wants to avoid colour banding.

- Intensity dithering. Along those lines, to produce a more accurate intensity on average, we would instead pick an integer randomly from a distribution such that the average intensity corresponds to the desired intensity. The nonlinearity means this is distinct from dithering for desired sRGB. [7]

- Minimize perceptual error. Given that human perception is nonlinear in intensity, maybe something else.
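A minimal sketch of the first three approaches in the list, for comparison. The function names are hypothetical, the decoding step is assumed to be division by 255, and the sRGB-to-linear conversion uses the commonly quoted constants (threshold 0.04045, exponent 2.4). Dithering and perceptual weighting are not covered here:

```python
import math


def srgb_to_linear(v):
    """Decode an sRGB value in [0, 1] to linear intensity (usual piecewise formula)."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def decode8(n):
    """Assumed inverse of quantization: integer code 0..255 back to [0, 1]."""
    return n / 255.0


def quantize_round(v):
    """Multiply by 255 and round to nearest (end bins are half width)."""
    return min(255, max(0, int(math.floor(v * 255.0 + 0.5))))


def quantize_equal_bins(v):
    """256 equal-width bins: [k/256, (k+1)/256) maps to k, with 1.0 clamped to 255."""
    return min(255, max(0, int(math.floor(v * 256.0))))


def quantize_min_intensity_error(v):
    """Pick the neighbouring code whose decoded linear intensity is closest."""
    target = srgb_to_linear(v)
    lo = min(254, max(0, int(math.floor(v * 255.0))))
    hi = lo + 1
    err_lo = abs(srgb_to_linear(decode8(lo)) - target)
    err_hi = abs(srgb_to_linear(decode8(hi)) - target)
    return lo if err_lo <= err_hi else hi


# A value just above the midpoint between codes 11 and 12.
sample = 11.503 / 255.0
print(quantize_round(sample), quantize_equal_bins(sample),
      quantize_min_intensity_error(sample))
```

Because the sRGB-to-linear curve is convex, the point halfway between two codes in linear intensity sits slightly above the halfway point in sRGB, so the third function rounds down in a narrow band where plain rounding goes up. The band is only a few thousandths of a code value wide, which suggests the choice rarely matters for 8-bit output.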

Is it really true that, as the article says, "the usual technique is to multiply by 255 and round to an integer"? It's simply stated uncited at the moment; I would hope for something like "the sRGB standard does not specify how to convert this to a digitized integer[cite], but the standard practice for an 8-bit digital medium is to multiply by 255 and round to an integer[cite]". I'm not sure that this is correct, as a Google search for sRGB dither uncovers that dithering is used in many applications. --Nanite (talk) 11:50, 23 December 2014 (UTC)

Display gamma versus encoding gamma

I recently made edits to the page to fix a common misconception that the gamma function currently described in the page is supposed to be used for displaying sRGB images on a monitor. This is not the case: the display gamma for sRGB is a 2.2 pure power function. It is different from the encoding gamma function, which is what's currently on the page (with the linear part near black).

When I made this modification, I backed it up using two sources:

- sRGB working draft 4 (which I used as a proxy for the real spec since it's behind a paywall), which clearly states the expected behavior of a reference display on page 7 (§2.1 Reference Display Conditions). It mandates a pure power 2.2 gamma function. Meanwhile, the section about encoding is the one that contains the gamma function with the linear part at the beginning and the 2.4 exponent. This is further confirmed by §3.1 (Introduction to encoding characteristics): "The encoding transformations between 1931 CIEXYZ values and 8 bit RGB values provide unambiguous methods to represent optimum image colorimetry when viewed on the reference display in the reference viewing conditions by the reference observer", which means that the encoded values are meant to be viewed on the reference display, with its pure power 2.2 gamma function.

- Mentions of sRGB EOTF in Poynton's latest book (which I own), which repeatedly states "Its EOCF is a pure 2.2-power function", "It is a mistake to place a linear segment at the bottom of the sRGB EOCF". On page 51-52 there is an even more detailed statement, which reads as follows:

Documentation associated with sRGB makes clear that the fundamental EOCF - the mapping from pixel value to display luminance - is supposed to be a 2.2-power function, followed by the addition of a veiling glare term. The sRGB standard also documents an OECF that is intended to describe a mapping from display luminance to pixel value, suitable to simulate a camera where the inverse power function's infinite slope at black would be a problem. The OECF has a linear segment near black, and has an power function segment with an exponent of 1/2.4. The OECF should not be inverted for use as an EOCF; the linear slope near black is not appropriate for an EOCF.

As many will confirm, Poynton is widely described as one of the foremost experts in the field (especially when it comes to gamma), which makes this a very strong reference.

To be honest, I fell for it as well and was convinced for a long time that the gamma function with the linear part was supposed to be used for display. But upon reading the standard itself, it appears this is completely wrong, and Poynton confirms this assertion. BT.709 has the exact same problem, i.e. the gamma function described in BT.709 is an OECF, not an EOCF - the EOCF was left unspecified until BT.1886 came along and clarified everything.

User:Dicklyon reverted my edit with the following comment: "I'm pretty sure this is nonsense". Since I cite two strong references and he cites none, I am going to reintroduce my changes.

E-t172 (talk) 20:33, 25 December 2014 (UTC)
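To make the two candidate display functions concrete, here is a minimal sketch comparing the inverse of the piecewise encoding with a pure 2.2 power function. The function names are hypothetical, the constants are the commonly quoted ones, and the veiling glare term mentioned in the Poynton quote is left out:

```python
def piecewise_decode(v):
    """Inverse of the piecewise encoding (linear segment near black, exponent 2.4)."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


def pure_power_decode(v, gamma=2.2):
    """Pure power-law display function, as described for the reference display."""
    return v ** gamma


# Print both decoded intensities for a few 8-bit codes.
for code in (1, 5, 10, 20, 50, 128, 255):
    v = code / 255.0
    print(code, piecewise_decode(v), pure_power_decode(v))
```

The two curves agree closely through the midtones and at white but diverge near black, where the piecewise inverse yields several times more light for the same code. That is the region the disagreement in this thread is about.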

- Poynton is a well-known expert, but he is wrong here; his statements on page 12 are not supported by what the sRGB standard says, nor by any other source about sRGB. The cited draft only mentions 2.2 in the introduction; it is not a part of the standard itself. The concept doesn't even make sense. sRGB is an output-referred color space. The encoding encodes the intended display intensity via the described nonlinear function. Dicklyon (talk) 20:47, 25 December 2014 (UTC)

- The cited draft mentions 2.2, along with the explicit pure power function, in section 2.1. Section 2.1 is fully normative. It is certainly not the introduction, which is section 1 (though the introduction also mentions 2.2). So yes, his statements are fully supported by the standard. The concept makes perfect sense because, as Poynton explains, you can't encode sRGB values from a camera with a pure power law for mathematical reasons (instability near black). This is why a slightly different function needs to be used for encoding as opposed to display. But since you were mentioning the introduction, here's what the introduction says: "There are two parts to the proposed standard described in this standard: the encoding transformations and the reference conditions. The encoding transformations provide all of the necessary information to encode an image for optimum display in the reference conditions." Notice "display in the reference conditions". "Reference conditions" is section 2, including the pure power 2.2 gamma function. I therefore rest my case. E-t172 (talk) 21:05, 25 December 2014 (UTC)

- I see my search failed to find it since they style it as "2,2" and I was searching for "2.2". I see it now in the reference conditions. But I still think this is a misinterpretation. The whole point of an "encoding" is that it is an encoding of "something"; in this case, sRGB values encode the intended output XYZ values. If the display doesn't use the inverse transform, it will not accurately reproduce the intended values. I believe that it is saying that a gamma 2.2 is "close enough" and is a reference condition in that sense, not that it is ideal or preferred in any sense, which would make no possible sense. I've never seen anyone but Poynton make this odd interpretation. Dicklyon (talk) 23:27, 25 December 2014 (UTC)

- Saying that the reference conditions are ideal for viewing sRGB implies that a veiling glare of 1 % is ideal. The money says that it is not. People will pay to get a better contrast ratio in a darkened home theater. Olli Niemitalo (talk) 00:12, 26 December 2014 (UTC)

- What "people" prefer has no bearing on accuracy. Besides, sRGB is not designed for home theater - it's designed for brightly lit rooms (64 lx reference ambient illuminance level). The reference viewing conditions simply indicate that correct color appearance is achieved by a standard observer looking at a reference monitor under these reference viewing conditions. Whether it is subjectively "ideal" or not is irrelevant - the goal of a colorspace is consistent rendering of color, not looking good. Theoretically, you can use sRGB in an environment with no veiling glare, as long as you compensate for the different viewing conditions using an appropriate color transform (CIECAM, etc.) so that color appearance is preserved. E-t172 (talk) 17:40, 27 December 2014 (UTC)

- Finding a source that specifically refutes Poynton is hard, of course, but many take the opposite, or normal classical interpretation viewpoint. For example Maureen Stone, another well-known expert. Dicklyon (talk) 00:39, 26 December 2014 (UTC)

- Here's another source about using the usual inverse of the encoding formula to get back to display XYZ: [8]; and another [9]; and another [10]. Dicklyon (talk) 05:35, 26 December 2014 (UTC)

- I see. Well, I guess we have two conflicting interpretations in the wild. I suspect that your interpretation (the one currently on the page) is the widely accepted one, and as a result, it most likely became the de facto standard, regardless of what the spec actually intended. So I'll accept the status quo for now. E-t172 (talk) 17:40, 27 December 2014 (UTC)

- Whichever one is correct officially, an inverted sRGB transfer function makes a lot more sense physically and, at least more recently, pragmatically. Perhaps a 2.2 function made sense with high-contrast CRTs, but the ghastly low contrasts of common modern displays really favor a fast ascent from black. Even on my professional IPS, the few blackest levels are virtually indiscernible when not set to sRGB mode. 128.76.238.18 (talk) 23:45, 2 August 2019 (UTC)

Just to add to this controversy, Poynton has been quoted as saying he reversed his earlier position regarding the piecewise, now favoring its use, and we have Jack Holm of the IEC saying that the EOTF should be the piecewise... HOWEVER, I don't have context for Dr. Poynton's quote, and Jack Holm is not normative; the standard as voted on and approved is the only normative thing here. The standard states the reference display uses a simple gamma of 2.2, and this is reiterated in the introduction to the published standard that anyone can read for free in the preview here: https://www.techstreet.com/standards/iec-61966-2-1-ed-1-0-b-1999?product_id=748237#jumps

Click on "preview" and go to page 11 for English; the last paragraph states "...The three major factors of this RGB space are the colorimetric RGB definition, the simple exponent value of 2,2, and the well-defined viewing conditions..." emphasis added.